Building Named Entity Recognition and Relationship Extraction Components with Hugging Face Transformers

Editor’s note: Sujit Pal is a speaker for ODSC East 2022. Be sure to check out his talk, “Transformer Based Approaches to Named Entity Recognition (NER) and Relationship Extraction (RE),” there!

Named Entity Recognition (NER) is the process of identifying spans of words or phrases in unstructured text (the entities) and classifying each one as belonging to a particular class (the entity type). Relation Extraction (RE) is the process of identifying relationships, stated or implied in the text, between pairs of entities. NER and RE are foundational for many Natural Language Processing (NLP) tasks.

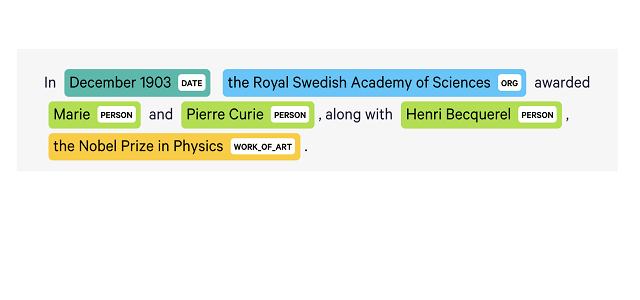

For example, in the sentence shown in Figure 1, the NER process has detected that Marie Curie, Pierre Curie, and Henri Becquerel are entities of type PERSON and that the Royal Swedish Academy of Sciences is an entity of type ORG (organization). It has also detected the Nobel Prize in Physics as an entity, but misclassified it as a WORK_OF_ART.

Figure 1: A sentence annotated with entities found by a Named Entity Recognition (NER) component
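NER models are commonly trained on token-level labels in the BIO scheme: B- marks the first token of an entity, I- marks a continuation token, and O marks a token outside any entity. The article does not prescribe a labeling scheme, so the following is only a minimal sketch. It converts character-level entity annotations into BIO tags. The example sentence is hypothetical and only modeled on Figure 1. The regex tokenizer and the helpers `span_of` and `spans_to_bio` are illustrative assumptions, not part of any library.

```python
import re
from typing import List, Tuple

# An entity annotation: (start_char, end_char, entity_type), end exclusive.
Span = Tuple[int, int, str]


def span_of(text: str, phrase: str, label: str) -> Span:
    """Return the first occurrence of `phrase` in `text` as a labeled span."""
    start = text.index(phrase)
    return (start, start + len(phrase), label)


def spans_to_bio(text: str, spans: List[Span]) -> List[Tuple[str, str]]:
    """Split `text` into words and punctuation, then tag each token with BIO labels."""
    tagged = []
    for match in re.finditer(r"\w+|[^\w\s]", text):
        tok_start, tok_end = match.start(), match.end()
        tag = "O"  # outside any entity by default
        for start, end, label in spans:
            if tok_start >= start and tok_end <= end:
                # The first token of an entity gets B-, later tokens get I-.
                tag = ("B-" if tok_start == start else "I-") + label
                break
        tagged.append((match.group(), tag))
    return tagged


# Hypothetical example sentence, loosely modeled on Figure 1.
sentence = ("The Royal Swedish Academy of Sciences awarded the prize "
            "to Marie Curie and Henri Becquerel.")
spans = [
    span_of(sentence, "Royal Swedish Academy of Sciences", "ORG"),
    span_of(sentence, "Marie Curie", "PERSON"),
    span_of(sentence, "Henri Becquerel", "PERSON"),
]
for token, tag in spans_to_bio(sentence, spans):
    print(f"{token:12} {tag}")
```

Real pipelines usually take their tokens from the model's own tokenizer rather than a regex, but the span-to-tag mapping works the same way.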

The NER process has many applications in NLP and search. In search applications, for example, identifying entities means that users can search for “things, not strings,” and thereby get more relevant results. Applied over a large collection of documents, NER can reduce the unstructured text to a list of entities and their counts. That list supports clustering or summarization, which helps humans understand what the collection contains. The output of NER can also feed downstream processes. One example is a coreference resolver that resolves the span “Marie” in “Marie and Pierre Curie” to the entity “Marie Curie.”
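Reducing a collection to “a list of entities and their counts” can be sketched in a few lines. This sketch assumes an NER model has already tagged each document with BIO labels, where B- begins an entity, I- continues it, and O marks tokens outside any entity. It collapses those tags into entity strings and counts them across the collection. The tagged documents and the helper `bio_to_entities` are hypothetical.

```python
from collections import Counter
from typing import List, Optional, Tuple


def bio_to_entities(tagged: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Collapse (token, BIO tag) pairs into (entity_text, entity_type) tuples."""
    entities: List[Tuple[str, str]] = []
    tokens: List[str] = []
    current_type: Optional[str] = None
    for token, tag in tagged:
        if tag.startswith("I-") and tag[2:] == current_type:
            tokens.append(token)  # continue the open entity
            continue
        if tokens:  # any other tag closes the open entity
            entities.append((" ".join(tokens), current_type))
        if tag == "O":
            tokens, current_type = [], None
        else:  # B-X, or an I-X that does not continue an X entity, starts a new one
            tokens, current_type = [token], tag[2:]
    if tokens:
        entities.append((" ".join(tokens), current_type))
    return entities


# Hypothetical NER output for two short documents.
documents = [
    [("Marie", "B-PERSON"), ("Curie", "I-PERSON"), ("studied", "O"), ("radioactivity", "O")],
    [("Pierre", "B-PERSON"), ("Curie", "I-PERSON"), ("and", "O"),
     ("Marie", "B-PERSON"), ("Curie", "I-PERSON"), ("married", "O")],
]
counts = Counter(ent for doc in documents for ent in bio_to_entities(doc))
for (text, etype), n in counts.most_common():
    print(f"{text} ({etype}): {n}")
```

Keying the counter on the (text, type) pair keeps the same string under different entity types separate. Merging variants such as “Marie” and “Marie Curie” would still require a step like coreference resolution.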

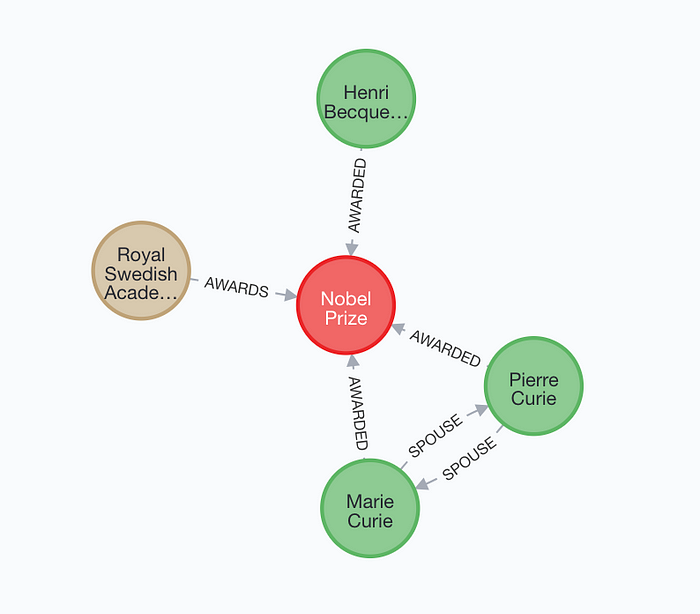

Similarly, an RE process applied to the same sentence might detect relations linking the “Nobel Prize” and “Royal Swedish Academy” entities on one side to the PERSON entities “Marie Curie”, “Pierre Curie”, and “Henri Becquerel” on the other, as shown in the graph in Figure 2. It might even infer from the span “Marie and Pierre Curie” that Marie Curie and Pierre Curie are married.

Figure 2: Relations detected by a Relation Extraction (RE) component applied to the same sentence
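RE is often framed as classification over candidate entity pairs. Each ordered pair of entities found by NER becomes one input, and a classifier assigns it a relation label or a “no relation” label. For Transformer-based classifiers, one widely used input format wraps the two entities in marker tokens. The sketch below only generates those marked inputs and does not do the classification. The marker strings `[E1]`, `[/E1]`, `[E2]`, `[/E2]`, the example sentence, and the helper names are assumptions for illustration. Entity spans are assumed not to overlap.

```python
from itertools import permutations
from typing import List, Tuple

Span = Tuple[int, int, str]  # (start_char, end_char, entity_type), end exclusive


def span_of(text: str, phrase: str, label: str) -> Span:
    """Return the first occurrence of `phrase` in `text` as a labeled span."""
    start = text.index(phrase)
    return (start, start + len(phrase), label)


def mark_pair(text: str, head: Span, tail: Span) -> str:
    """Wrap the head entity in [E1] ... [/E1] and the tail entity in [E2] ... [/E2]."""
    inserts = [(head[0], "[E1] "), (head[1], " [/E1]"),
               (tail[0], "[E2] "), (tail[1], " [/E2]")]
    # Insert from right to left so that earlier character offsets stay valid.
    for pos, marker in sorted(inserts, key=lambda item: item[0], reverse=True):
        text = text[:pos] + marker + text[pos:]
    return text


def candidate_inputs(text: str, spans: List[Span]) -> List[Tuple[Span, Span, str]]:
    """One marked input per ordered entity pair; a relation classifier would label each."""
    return [(h, t, mark_pair(text, h, t)) for h, t in permutations(spans, 2)]


# Hypothetical example sentence (not the one in Figure 1).
sentence = "Marie Curie shared the prize with Henri Becquerel."
spans = [span_of(sentence, "Marie Curie", "PERSON"),
         span_of(sentence, "Henri Becquerel", "PERSON")]
for head, tail, marked in candidate_inputs(sentence, spans):
    print(marked)
```

Because the pairs are ordered, n entities yield n(n-1) candidates. The five entities in Figure 1 would therefore produce 20 inputs to classify, which is one reason pipelines often filter pairs by entity type before classification.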

As with NER, the RE process extracts structured information from unstructured text. When RE is applied over a large collection of text that has already gone through the NER process, it can extract graphs called Knowledge Graphs. These resemble the one shown in Figure 2 but are much more complex. Knowledge Graphs can be queried directly, used to reason about the entities they contain, and used to power recommendation and exploration engines.
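At its simplest, a Knowledge Graph is a set of (head, relation, tail) triples produced by RE, indexed so that it can be queried. The sketch below stores triples of the kind Figure 2 describes in an in-memory adjacency map and supports forward and reverse lookups. The relation names `won`, `awards`, and `spouse_of` and the helper functions are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]  # (head entity, relation, tail entity)
Graph = Dict[str, Set[Tuple[str, str]]]


def build_graph(triples: List[Triple]) -> Graph:
    """Index triples as an adjacency map: head -> {(relation, tail), ...}."""
    graph: Graph = defaultdict(set)
    for head, relation, tail in triples:
        graph[head].add((relation, tail))
    return graph


def query(graph: Graph, head: str, relation: str) -> List[str]:
    """Tails connected to `head` by `relation` (forward lookup)."""
    return sorted(tail for rel, tail in graph.get(head, set()) if rel == relation)


def heads(graph: Graph, relation: str, tail: str) -> List[str]:
    """Heads connected to `tail` by `relation` (reverse lookup via a full scan)."""
    return sorted(h for h, edges in graph.items() if (relation, tail) in edges)


# Hypothetical RE output; relation names are illustrative only.
triples = [
    ("Marie Curie", "won", "Nobel Prize in Physics"),
    ("Pierre Curie", "won", "Nobel Prize in Physics"),
    ("Henri Becquerel", "won", "Nobel Prize in Physics"),
    ("Royal Swedish Academy of Sciences", "awards", "Nobel Prize in Physics"),
    ("Marie Curie", "spouse_of", "Pierre Curie"),
]
graph = build_graph(triples)
print(heads(graph, "won", "Nobel Prize in Physics"))
print(query(graph, "Marie Curie", "spouse_of"))
```

The reverse lookup scans every node. That is fine for a toy graph, but a graph built from a large collection would more likely live in a graph database or triple store.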

What is considered an interesting entity or relation usually varies widely by application domain. As a result, while there do exist some good pre-trained models for NER and RE, they are likely to be trained on entities and relations that are not of interest in your domain. Therefore, it may often be necessary to train your own model, with labeled data from your own domain.

Early models for NER and RE were heavily dependent on feature engineering, which meant that one needed considerable domain expertise to build NER and RE models. Deep Learning-based neural models substituted compute for feature engineering, allowing the model to explore the feature space and find the best settings using Gradient Descent. However, neural models typically require much more labeled data to train. Given that labeled data creation is manual, labor-intensive, and error-prone, and often requires domain expertise, it becomes an expensive proposition to produce enough labeled data to train neural models.

The emergence of Transformers in 2017 changed that calculus. The Transformer is a novel Encoder-Decoder architecture designed for sequence-to-sequence tasks. Through a mechanism called self-attention, it handles long-range dependencies better than the then-leading architecture, Recurrent Neural Networks (RNNs). Shortly thereafter, pre-trained language models based on the Transformer architecture, such as BERT, appeared on the scene, an event that has been called the “ImageNet moment for NLP.”
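Self-attention lets every token compute a weighted mix of all tokens in the sequence. The weights come from comparing query and key projections, so distant tokens are just as reachable as adjacent ones. Below is a minimal single-head sketch of the scaled dot-product attention used in the original Transformer, softmax(QK^T / sqrt(d_k)) V, written in plain Python. Real implementations add multiple heads, masking, and batched tensor operations. The toy input and the weight matrices `w_q`, `w_k`, `w_v` are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (n x m) and b (m x p)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    top = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Matrix:
    """Single-head scaled dot-product self-attention over token vectors x."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # (tokens x tokens) similarity matrix
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)  # each output row mixes all value vectors


# Three toy 2-dimensional token vectors and identity projections (hypothetical).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out = self_attention(x, identity, identity, identity)
print(out)
```

If the query projection is all zeros, every score is equal and each output row becomes the plain average of the value vectors. That makes a handy sanity check: the learned projections are what let the model attend selectively.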

The BERT architecture consists of multiple Transformer Encoders stacked together and was trained in a self-supervised manner on massive datasets. As a result, it acquired a statistical understanding of language and can function as a Language Model. Pre-training is an expensive and time-consuming process. Fortunately, large organizations have pre-trained many BERT variants and made them available for general use. These include models trained on domain-specific corpora (legal, biomedical, scientific) and model variants with improved performance characteristics (RoBERTa, ALBERT, DistilBERT).
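BERT's self-supervision comes mainly from masked language modeling: some input tokens are corrupted, and the model learns to predict the originals. The sketch below is a simplified version of the masking recipe described for BERT. Each token is selected independently with probability 0.15. Of the selected tokens, 80% become `[MASK]`, 10% become a random vocabulary token, and 10% are left unchanged. Using `None` as the "ignore" label and a whitespace-split vocabulary are simplifying assumptions. Libraries handle this for you in practice, for example Hugging Face's `DataCollatorForLanguageModeling`.

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"


def mask_tokens(
    tokens: List[str],
    vocab: List[str],
    rng: random.Random,
    mask_prob: float = 0.15,
) -> Tuple[List[str], List[Optional[str]]]:
    """Corrupt tokens for masked language modeling.

    Returns (inputs, labels): labels[i] is the original token where the model
    must predict it, and None where the loss should ignore the position.
    """
    inputs: List[str] = []
    labels: List[Optional[str]] = []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)  # the model must recover this token
            r = rng.random()
            if r < 0.8:
                inputs.append(MASK)  # 80%: replace with [MASK]
            elif r < 0.9:
                inputs.append(rng.choice(vocab))  # 10%: random token
            else:
                inputs.append(tok)  # 10%: keep unchanged
        else:
            labels.append(None)  # not selected: no prediction target
            inputs.append(tok)
    return inputs, labels


rng = random.Random(0)
tokens = "the nobel prize in physics was shared by three scientists".split()
inputs, labels = mask_tokens(tokens, vocab=tokens, rng=rng)
print(inputs)
print(labels)
```

Because the labels come from the text itself, any unlabeled corpus can serve as training data. This is what makes pre-training on massive datasets feasible.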

These pre-trained models can be fine-tuned for downstream tasks such as NER and RE in specialized domains. They already encode a lot of knowledge about the language they were trained on. As a result, fine-tuning them needs considerably less labeled data than training a neural model from scratch to reach comparable performance. As neural models, they also need no feature engineering expertise to build. Finally, the Hugging Face Transformers library makes using these models in your code almost as easy as using a linear, convolutional, or recurrent layer. Overall, fine-tuning these massive pre-trained language models represents a good middle ground for building specialized NER and RE components.
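One practical detail of fine-tuning a pre-trained model for NER is that its tokenizer splits words into subword pieces. Word-level labels therefore have to be re-aligned to the tokens. Hugging Face fast tokenizers expose the token-to-word mapping through `BatchEncoding.word_ids()`. So that this sketch runs without downloading a model, it hard-codes a hypothetical `word_ids` list, and the subword split of "Curie" is an assumption. It follows one common convention: only the first subword of each word keeps its label, and special tokens and continuation subwords get -100, which `torch.nn.CrossEntropyLoss` ignores by default. Labeling every subword is another valid choice.

```python
from typing import List, Optional

IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


def align_labels(word_ids: List[Optional[int]], word_labels: List[int]) -> List[int]:
    """Project word-level label ids onto subword tokens.

    word_ids[i] is the index of the word that subword token i came from, or None
    for special tokens such as [CLS] and [SEP]. Only the first subword of each
    word keeps its label; all other positions get IGNORE_INDEX.
    """
    aligned: List[int] = []
    previous: Optional[int] = None
    for wid in word_ids:
        if wid is None:
            aligned.append(IGNORE_INDEX)  # special token
        elif wid != previous:
            aligned.append(word_labels[wid])  # first subword of a new word
        else:
            aligned.append(IGNORE_INDEX)  # continuation subword of the same word
        previous = wid
    return aligned


label2id = {"O": 0, "B-PERSON": 1, "I-PERSON": 2}
# Words: ["Marie", "Curie", "won"] with word-level tags B-PERSON, I-PERSON, O.
word_labels = [label2id[t] for t in ["B-PERSON", "I-PERSON", "O"]]
# Hypothetical tokenization: [CLS] Marie Cu ##rie won [SEP]
word_ids = [None, 0, 1, 1, 2, None]
print(align_labels(word_ids, word_labels))
```

In a real pipeline, `word_ids` would typically come from `tokenizer(words, is_split_into_words=True).word_ids()`. The aligned labels would then be passed to a model such as `AutoModelForTokenClassification`.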

If you found this post interesting and want to learn more, please attend my tutorial on Transformer-based approaches to NER and RE at ODSC East 2022. At the tutorial, I will cover the evolution of NER and RE components from traditional to neural to Transformer-based models. We will also work together to build and train NER and RE components using PyTorch and the Hugging Face Transformers library. I look forward to seeing you there!

About the author/ODSC East 2022 Speaker:

Sujit Pal is a Technology Research Director at Elsevier Labs, where he helps build technology that assists scientists in making breakthroughs and clinicians in saving lives. His primary interests are information retrieval, ontologies, natural language processing, machine learning, deep learning, and distributed processing.

Read more data science articles on OpenDataScience.com, including tutorials and guides from beginner to advanced levels!