Linear Regression in Machine Learning

Machine learning technologies are part of almost every phase of our daily lives. These algorithms do more than recognize text, audio, video, or images. They also help improve many other areas, including marketing, customer service, and medicine. Two of the main categories of machine learning algorithms are supervised and unsupervised learning. In this blog, we focus on linear regression, which is a supervised learning algorithm, and take a closer look at its two forms.

So what do we mean by Linear Regression?

Linear regression is a statistical machine learning algorithm used for predictive analysis. Its predictions are continuous and follow a line with a constant slope. This makes it suitable for predicting values in a continuous (real-valued) range, such as salary, age, product sales, or price, rather than classifying inputs into categories such as cat or dog.

Why use Linear Regression?

Linear regression models a linear relationship between a dependent variable, y, and one or more independent variables, x. In other words, it describes how the value of the dependent variable y changes as the values of the independent variables change.
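One common way to write this relationship as an equation is shown below. The symbols are a standard notation assumed here, not taken from the original post: β₀ is the intercept, β₁, …, βₚ are the coefficients (slopes) of the p independent variables, and ε is the error term.

```latex
y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_p x_p + \varepsilon
```

With a single independent variable (p = 1), this reduces to the slope-intercept form of a line, y = β₀ + β₁x + ε.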

Now, let’s look at the practical applications of linear regression. Linear regression is one of the most important and widely used statistical techniques in both business and economics. Some real-world applications include:

Case 1: Fruit yield is the dependent variable and rainfall is the explanatory variable, i.e., fruit production depends on the amount of rainfall throughout the year.

Case 2: A firm wants to examine the relationship between advertising and sales, which can be modeled using linear regression.

Case 3: Estimating how the price of a house increases or decreases depending on the number of rooms it has.

Now, let’s shed some light on the assumptions that linear regression makes about the data sets it is applied to.

- Little or no autocorrelation: Autocorrelation occurs when the residual errors are dependent on each other. Linear regression assumes there is little to no autocorrelation in the data.

- Little or no multicollinearity: Multicollinearity occurs when the independent variables depend on (are correlated with) each other. Linear regression assumes that multicollinearity either doesn’t exist at all or is present only to a small degree.

- Variable relationship: Linear regression assumes a linear relationship between the feature variables and the response variable.
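The first two assumptions can be checked numerically. The sketch below is not part of the original tutorial. It computes two common diagnostics with numpy and scikit-learn: the Durbin-Watson statistic on the residuals, as a check for autocorrelation, and the variance inflation factor (VIF) of each feature, as a check for multicollinearity. The helper names `durbin_watson` and `variance_inflation_factors` are hypothetical and written only for this example. The statsmodels library also ships ready-made implementations of both statistics.

```python
import numpy as np
from sklearn.linear_model import LinearRegression


def durbin_watson(residuals):
    """Durbin-Watson statistic (hypothetical helper).

    Values near 2 suggest little autocorrelation in the residuals;
    values toward 0 or 4 suggest positive or negative autocorrelation.
    """
    residuals = np.asarray(residuals, dtype=float)
    return np.sum(np.diff(residuals) ** 2) / np.sum(residuals ** 2)


def variance_inflation_factors(X):
    """VIF for each column of X (hypothetical helper).

    Each column is regressed on all the other columns, and its VIF is
    1 / (1 - R^2). Values near 1 mean little multicollinearity; a common
    rule of thumb treats values above 5 or 10 as a warning sign.
    """
    X = np.asarray(X, dtype=float)
    vifs = []
    for j in range(X.shape[1]):
        target = X[:, j]
        others = np.delete(X, j, axis=1)
        r2 = LinearRegression().fit(others, target).score(others, target)
        # A perfectly collinear column would make the denominator zero.
        vifs.append(np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2))
    return np.array(vifs)
```

In practice, `durbin_watson` would be called on `y - model.predict(x)` after fitting a model, and `variance_inflation_factors` on the input array `x`.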

Linear regression is categorized into two types, namely simple linear regression and multiple linear regression.

1. Simple linear regression: It uses a single independent variable to predict the value of a numerical dependent variable, fitting a straight line in the familiar slope-intercept form.
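For simple linear regression, ordinary least squares has a closed-form solution. The slope is b₁ = Σ(x − x̄)(y − ȳ) / Σ(x − x̄)², and the intercept is b₀ = ȳ − b₁x̄. The sketch below computes both with plain numpy. The function name `fit_simple_ols` and the small dataset are made up for illustration and do not come from the original post. A from-scratch version like this can be handy for cross-checking the numbers scikit-learn reports.

```python
import numpy as np


def fit_simple_ols(x, y):
    """Return (intercept, slope) of the least-squares line y = b0 + b1 * x.

    Hypothetical helper written for illustration.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = x.mean(), y.mean()
    # Slope: covariance of x and y over the variance of x (the 1/n factors cancel).
    slope = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
    # The least-squares line always passes through (x_mean, y_mean).
    intercept = y_mean - slope * x_mean
    return intercept, slope


# Illustrative data, made up for this example.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
b0, b1 = fit_simple_ols(x, y)
print(f"intercept = {b0:.1f}, slope = {b1:.1f}")  # intercept = 2.2, slope = 0.6
```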

To make the study of linear regression more enjoyable, let’s learn to implement it in Python.

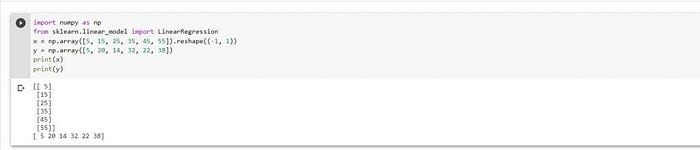

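Step 1: Import the numpy package and the class LinearRegression from sklearn.linear_model, then define the data. The inputs (regressors, 𝑥) and the output (response, 𝑦) should be arrays or similar objects. scikit-learn expects 𝑥 to be two-dimensional, with one row per observation and one column per input, so a single input variable is usually reshaped with .reshape((-1, 1)).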

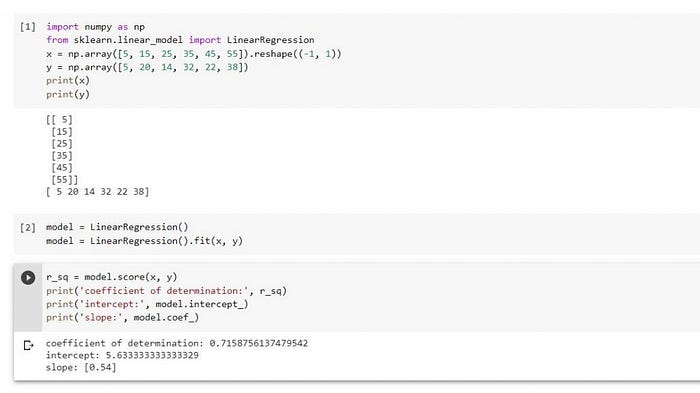

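Step 2: Next, create a linear regression model as an instance of LinearRegression and fit it to the existing data by calling .fit() on the model with 𝑥 and 𝑦. The .fit() method returns the fitted model itself, so creating and fitting the model can be done in a single statement.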

Step 3: Once the model is fitted, we can check whether it works satisfactorily and interpret the results. Calling .score() on the model returns the coefficient of determination (𝑅²), and the intercept and slope are stored in the model’s intercept_ and coef_ attributes.
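Because the original code appears only as screenshots, here is a text sketch of the three steps using scikit-learn’s `LinearRegression`. The data values are illustrative and are not the ones from the original post. They match the from-scratch least-squares example, so the results can be compared directly.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Step 1: define the data (illustrative values).
# scikit-learn expects a 2-D input: one row per observation, one column per feature.
x = np.array([1, 2, 3, 4, 5]).reshape((-1, 1))
y = np.array([2, 4, 5, 4, 5])

# Step 2: create the model and fit it; .fit() returns the fitted model.
model = LinearRegression().fit(x, y)

# Step 3: inspect the results.
r_sq = model.score(x, y)                # coefficient of determination, R^2
print("R^2:", r_sq)                     # approximately 0.6
print("intercept:", model.intercept_)   # approximately 2.2
print("slope:", model.coef_)            # approximately [0.6]

# Predictions for new inputs (these must also be 2-D).
x_new = np.array([6, 7]).reshape((-1, 1))
print("predictions:", model.predict(x_new))  # approximately [5.8 6.4]
```

Note that `coef_` is an array even with a single input, because the same attribute holds one coefficient per feature in the multiple-regression case.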

2. Multiple linear regression: If more than one independent variable is used to predict the value of a numerical dependent variable, the model is known as multiple linear regression. Let’s look at its implementation in Python.

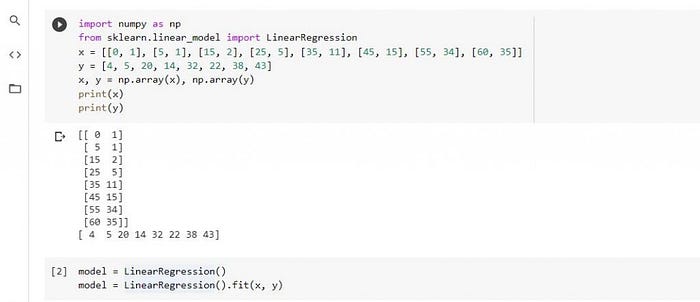

Step 1: First, import the necessary libraries, numpy and the class LinearRegression from sklearn.linear_model, and provide the known inputs and output. This time 𝑥 is a two-dimensional array with one column per independent variable.

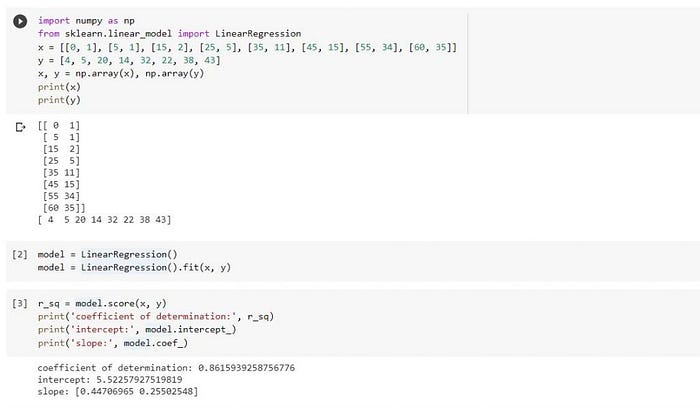

Step 2: Next, create the regression model as an instance of LinearRegression and fit it with .fit().

Step 3: Finally, obtain the properties of the model the same way as for simple linear regression: .score() for 𝑅², intercept_ for the intercept, and coef_, which now holds one coefficient per independent variable.
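A text sketch of the multiple-regression steps follows, again with illustrative data rather than the data in the original screenshots. As a sanity check, the output is constructed to follow y = 1 + 2·x₁ + 3·x₂ exactly, with no noise. The fitted model should therefore recover an intercept of 1 and coefficients of 2 and 3, up to floating-point error.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Step 1: illustrative inputs, 8 observations and 2 independent variables (one per column).
x = np.array([[0, 1], [5, 1], [15, 2], [25, 5],
              [35, 11], [45, 15], [55, 34], [60, 35]])
# Output built as y = 1 + 2*x1 + 3*x2 with no noise (a synthetic sanity check).
y = 1 + 2 * x[:, 0] + 3 * x[:, 1]

# Step 2: create and fit the model.
model = LinearRegression().fit(x, y)

# Step 3: inspect the results, exactly as for simple linear regression.
print("R^2:", model.score(x, y))        # approximately 1.0 (perfect fit)
print("intercept:", model.intercept_)   # approximately 1.0
print("coefficients:", model.coef_)     # approximately [2. 3.], one per input column

# Predictions for new observations (each row has one value per independent variable).
x_new = np.array([[10, 2], [20, 4]])
print("predictions:", model.predict(x_new))  # approximately [27. 53.]
```

With real, noisy data the coefficients will not match any "true" values exactly, and 𝑅² will be below 1.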

Summary

From our reading, we can conclude that linear regression is perhaps one of the most well-known and well-understood algorithms in statistics and machine learning. You do not need a deep background in statistics or linear algebra to start using it. In this post, we have explained what linear regression means in plain terms and looked at some of its real-life applications. We have also covered the two types of linear regression and their implementation in Python. I hope this blog has given readers the basic knowledge they need to start tackling regression problems.

Read more data science articles on OpenDataScience.com, including tutorials and guides from beginner to advanced levels!