Mastering RAG: Enhancing AI Applications with Retrieval-Augmented Generation

Retrieval-augmented generation (RAG) has emerged as a transformative approach for building AI systems capable of delivering accurate, contextually relevant responses. In a recent webinar, Sheamus McGovern, founder of ODSC and head of AI at Cortical Ventures, alongside data engineer Ali Hesham, shared their expertise on mastering RAG and constructing robust RAG systems.

Understanding the Need for RAG

While enterprise search systems have long facilitated data retrieval, they had limitations. Relational databases like Postgres and Oracle were effective for structured data but required technical proficiency to query. Search tools like Elasticsearch and Solr offered robust solutions for querying unstructured information, but lexical ranking methods such as TF-IDF and BM25 score documents on term statistics and often lacked contextual understanding. These systems struggled to adapt to evolving taxonomies, and their indexes often went stale.

RAG addresses these challenges by integrating retrieval mechanisms with generative language models. This approach allows AI applications to interpret natural language queries, retrieve relevant data, and generate human-like responses grounded in accurate information.

How RAG Operates

RAG systems bridge the gap between traditional retrieval-based search and generative AI. When a user inputs a query, an LLM (large language model) interprets it using Natural Language Understanding (NLU). The system retrieves pertinent documents from a data index and generates a response through Natural Language Generation (NLG). This end-to-end process enhances precision and relevance, unlike older systems limited to keyword matching.
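
The flow above can be sketched in a few lines. This is a minimal illustration, not the presenters' implementation: `tokenize`, `retrieve`, `build_prompt`, and `answer` are hypothetical names, the retriever uses simple word overlap as a stand-in for a real index, and `generate` is a placeholder for whichever LLM call you use.

```python
import re
from typing import Callable, List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Toy retriever (assumption): rank documents by words shared with the query."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in documents]
    # Highest overlap first; the sort is stable, so ties keep their input order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no words with the query.
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query: str, passages: List[str]) -> str:
    """Put the retrieved passages ahead of the question to ground the answer."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


def answer(query: str, documents: List[str], generate: Callable[[str], str]) -> str:
    """Retrieve, assemble the prompt, then hand it to the generator."""
    return generate(build_prompt(query, retrieve(query, documents)))
```

Passing `generate=lambda prompt: prompt` returns the assembled prompt itself, which is a convenient way to inspect what the model would see before wiring in a real LLM client.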

RAG vs. Traditional LLMs

Traditional LLMs generate outputs based solely on their training data, which can result in hallucinations or outdated information. They lack access to private enterprise data and real-time updates. RAG systems mitigate these shortcomings by retrieving up-to-date information from proprietary data sources, which reduces inaccuracies and improves contextual relevance. While traditional models rely on broad training datasets, RAG grounds responses in the most current and specific data available.

The RAG Stack: Data to Response

A RAG system begins with data ingestion from multiple sources, including structured data from relational databases, unstructured data from NoSQL databases, and programmatic data via APIs. This data undergoes processing into machine-readable formats and is stored as vector embeddings in an index using tools like Pinecone, Weaviate, or Milvus.

Upon receiving a user query, the LLM performs NLU, the system retrieves the content whose embeddings best match the query, and the LLM generates a contextually accurate response. This process involves:

- Data acquisition and preparation

- Embedding generation

- Storing embeddings in vector stores

- Querying and retrieval

- Evaluating results
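
The embedding, storage, and query steps in the list above can be illustrated with a small in-memory index. This is a sketch under stated assumptions: `toy_embed` is a hypothetical hashed bag-of-words function standing in for a real embedding model, and `InMemoryVectorStore` is a hypothetical stand-in for a vector store such as Pinecone, Weaviate, or Milvus; it does not reflect any of their APIs.

```python
import hashlib
import math
import re
from typing import List, Tuple

DIMENSIONS = 64  # hypothetical size; real embedding models use far more dimensions


def toy_embed(text: str) -> List[float]:
    """Hash each word into a fixed-size count vector, then L2-normalize it."""
    vector = [0.0] * DIMENSIONS
    for word in re.findall(r"\w+", text.lower()):
        digest = hashlib.sha256(word.encode("utf-8")).hexdigest()
        vector[int(digest, 16) % DIMENSIONS] += 1.0
    norm = math.sqrt(sum(value * value for value in vector))
    return [value / norm for value in vector] if norm else vector


class InMemoryVectorStore:
    """Keeps (text, embedding) pairs in a list and scans them at query time."""

    def __init__(self) -> None:
        self._entries: List[Tuple[str, List[float]]] = []

    def add(self, text: str) -> None:
        self._entries.append((text, toy_embed(text)))

    def query(self, text: str, top_k: int = 3) -> List[Tuple[float, str]]:
        """Return up to top_k (score, text) pairs, best match first."""
        query_vector = toy_embed(text)
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = [
            (sum(q * d for q, d in zip(query_vector, doc_vector)), doc_text)
            for doc_text, doc_vector in self._entries
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:top_k]
```

A linear scan like this is only practical for small collections; dedicated vector stores use approximate nearest-neighbor indexes to keep queries fast at scale.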

Indexing and Embeddings

Indexing transforms data into a searchable structure, while embeddings represent text as high-dimensional vectors that capture semantic meaning. Similarity measures such as cosine similarity assess how close a user query is to the indexed data. Pre-trained models are often used to produce embeddings, sometimes with adjustments to align them with domain-specific requirements.
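
As a concrete reference, cosine similarity is the dot product of two vectors divided by the product of their lengths, giving a value between -1 and 1. The sketch below uses only the standard library; returning 0.0 for a zero-length vector is a convention chosen here, not something the webinar specified.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the cosine of the angle between vectors a and b."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        # Assumption: treat an all-zero vector as similar to nothing.
        return 0.0
    return dot / (norm_a * norm_b)
```

Because the score depends only on direction, a short query and a long passage can still score highly when they point the same way in embedding space.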

Chunking long documents into smaller text segments can enhance embedding quality and retrieval efficiency. Testing different chunk sizes and embedding methods is crucial to ensure the system accurately captures relationships within the data.
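
One simple way to experiment with chunk sizes is a word-based splitter like the sketch below. The function name `chunk_words`, the default sizes, and the use of overlapping windows are assumptions for illustration; overlap is a common choice so that a sentence cut at a boundary still appears whole in one chunk.

```python
from typing import List


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 20) -> List[str]:
    """Split text into chunks of up to chunk_size words, sharing `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")

    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the window already reached the end of the text
    return chunks
```

Running the same evaluation set against several `chunk_size` and `overlap` settings is a straightforward way to compare their effect on retrieval quality.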

Ranking and Re-ranking

Effective information retrieval requires prioritizing the most relevant results. Ranking involves assigning similarity scores to retrieved documents, often using cosine similarity. Re-ranking techniques further refine this process, leveraging transformer-based models to enhance the contextual alignment of top-ranked results. Hybrid approaches that combine multiple ranking methods, such as keyword and vector search, can further improve results.
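
To make the hybrid idea concrete, the sketch below merges several ranked lists with reciprocal rank fusion (RRF). RRF is one common fusion method chosen here for illustration; the webinar did not name a specific one. Each document earns 1 / (k + rank) from every list it appears in, and the constant `k = 60` is a widely used default.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def reciprocal_rank_fusion(rankings: Sequence[Sequence[str]], k: int = 60) -> List[str]:
    """Fuse ranked lists of document IDs into a single ranking."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # Documents near the top of any list contribute the most.
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by document ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))
```

For example, fusing a vector-search ranking `["a", "b", "c"]` with a keyword ranking `["b", "c", "d"]` puts `"b"` first, since it ranks highly in both lists. The fused top results can then be passed to a transformer-based re-ranker.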

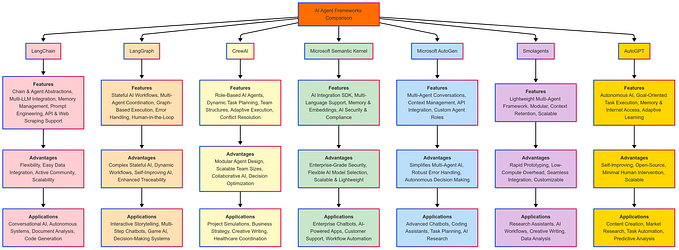

RAG Tools and Frameworks

Building a RAG system often involves integrating multiple tools. Popular LLM options include hosted providers such as OpenAI and Anthropic, and open-source models like Llama 3 and Mistral. Frameworks such as LlamaIndex and LangChain facilitate orchestration, embedding, retrieval, and evaluation. Privacy, performance, and domain-specific requirements dictate the choice of models and frameworks.

Evaluating RAG Systems

Evaluation is critical to ensure RAG systems deliver reliable outputs. Metrics like accuracy, fidelity to the retrieved sources, response time, and user satisfaction indicate how well the system performs. Human evaluation, automated scoring methods like BLEU, and A/B testing help assess quality. Regular evaluation helps detect data drift and model degradation early, keeping the system aligned with evolving data sources.
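
A small harness can track two of these metrics, accuracy and response time, over a labeled question set. This is a rough sketch: `evaluate` is a hypothetical helper, `system` is any callable that maps a question to a response, and counting a response as correct when it contains the expected text is a deliberately crude proxy for human or model-based grading.

```python
import time
from statistics import mean
from typing import Callable, Dict, List, Tuple


def evaluate(
    system: Callable[[str], str],
    examples: List[Tuple[str, str]],
) -> Dict[str, float]:
    """Run each (question, expected_text) pair and report accuracy and latency."""
    if not examples:
        raise ValueError("examples must not be empty")

    correct = 0
    latencies: List[float] = []
    for question, expected_text in examples:
        start = time.perf_counter()
        response = system(question)
        latencies.append(time.perf_counter() - start)
        # Crude correctness check (assumption): case-insensitive substring match.
        if expected_text.lower() in response.lower():
            correct += 1

    return {
        "accuracy": correct / len(examples),
        "mean_latency_s": mean(latencies),
    }
```

Re-running the same harness on a schedule, or after each re-indexing, makes regressions visible as a drop in accuracy or a rise in latency.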

Practical Demonstration

Ali Hesham provided a hands-on demonstration, showcasing a RAG system built around a medical research paper. The demonstration involved data loading, text splitting, chunking, embedding, and indexing. A retriever was built, and a re-ranker powered by Cohere’s model refined the results. This example illustrated the practical steps involved in constructing a RAG pipeline and highlighted the need for API keys from providers like OpenAI and Cohere.

Additional Considerations

Caching mechanisms like Redis can reduce query latency. Embedding updates and re-indexing may require batching or real-time streaming, depending on the chosen vector store. Multi-tenant RAG systems leverage role-based access control, while small language models (SLMs) offer privacy advantages by enabling on-device deployments. Encryption safeguards data during transmission.
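
The caching idea can be sketched with an in-process dictionary and a time-to-live (TTL). `QueryCache` is a hypothetical class standing in for an external cache such as Redis and does not use the Redis API; the injectable `clock` parameter is an assumption added to make expiry easy to test.

```python
import time
from typing import Callable, Dict, Tuple


class QueryCache:
    """Caches answers per normalized query and expires them after ttl_seconds."""

    def __init__(
        self,
        ttl_seconds: float = 300.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, str]] = {}

    def get_or_compute(self, query: str, compute: Callable[[str], str]) -> str:
        """Return a fresh cached answer, or call compute(query) and store it."""
        # Normalize case and whitespace so trivially different queries share a key.
        key = " ".join(query.lower().split())
        now = self._clock()
        cached = self._entries.get(key)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]
        answer = compute(query)
        self._entries[key] = (now, answer)
        return answer
```

A short TTL limits how long a cached answer can outlive a re-index, at the cost of more calls to the full pipeline.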

RAG systems represent a pivotal advancement in AI-driven information retrieval. By combining retrieval and generative capabilities, they enable data professionals to develop applications that deliver precise, context-aware responses. As the landscape evolves, mastering RAG will be essential for organizations seeking to leverage AI to its fullest potential.